Getting Started with Risk Management

Learn how to evolve a sustainable and repeatable RIMS program.

Source: http://is.gd/ZZtBCg

While geotags are the most definitive location information a tweet can have, tweets can also have plenty more salient information: hashtags, Foursquare check-ins, or text references to certain cities or states, to name a few. The authors of the paper created their algorithm by analyzing the content of tweets that did have geotags and then searching for similarities in content in tweets without geotags to assess where they might have originated. Of a body of 1.5 million tweets, 90 percent were used to train the algorithm, and 10 percent were used to test it. The paper.
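The excerpt does not say which model the authors used, so the following is only a minimal sketch of the general idea, assuming a naive Bayes classifier over tweet words: learn word frequencies per location from geotagged tweets, hold out 10 percent for testing, and guess the location of untagged text. The function names and the toy tweets are hypothetical and not taken from the paper.

```python
import math
import random
from collections import Counter, defaultdict


def tokenize(text):
    # Lowercase and split on whitespace; hashtags stay intact as tokens.
    return text.lower().split()


def split(data, train_fraction=0.9, seed=0):
    # Shuffle reproducibly, then cut into training and test portions.
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def train(labelled):
    # labelled: iterable of (text, location) pairs from geotagged tweets.
    word_counts = defaultdict(Counter)
    loc_counts = Counter()
    vocab = set()
    for text, loc in labelled:
        loc_counts[loc] += 1
        for word in tokenize(text):
            word_counts[loc][word] += 1
            vocab.add(word)
    return word_counts, loc_counts, vocab


def predict(model, text):
    # Return the location with the highest log-probability for the text,
    # using add-one (Laplace) smoothing for words unseen at a location.
    word_counts, loc_counts, vocab = model
    total = sum(loc_counts.values())
    best_loc, best_score = None, -math.inf
    for loc, count in loc_counts.items():
        score = math.log(count / total)
        denom = sum(word_counts[loc].values()) + len(vocab)
        for word in tokenize(text):
            score += math.log((word_counts[loc][word] + 1) / denom)
        if score > best_score:
            best_loc, best_score = loc, score
    return best_loc


if __name__ == "__main__":
    # Hypothetical toy data standing in for geotagged tweets.
    geotagged = [
        ("#nyc subway delay", "New York"),
        ("brooklyn bridge #nyc", "New York"),
        ("#sf fog golden gate", "San Francisco"),
        ("cable car #sf", "San Francisco"),
    ]
    model = train(geotagged)
    print(predict(model, "stuck on the subway #nyc"))
```

In a real evaluation the model would be trained on the 90 percent portion returned by `split` and scored by comparing `predict` against the known geotags of the held-out 10 percent.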

Both vocabulary and question comprehension were positively correlated with generalized trust. Those with the highest vocab scores were 34 percent more likely to trust others than those with the lowest scores, and someone who had a good perceived understanding of the survey questions was 11 percent more likely to trust others than someone with a perceived poor understanding. The correlation stayed strong even when researchers controlled for socio-economic class. Full study results.

This study, too, found a correlation between trust and self-reported health and happiness. The trusting were 6 percent more likely to say they were "very happy," and 7 percent more likely to report good or excellent health.

It's already possible to make some inferences about the appearance of crime suspects from their DNA alone, including their racial ancestry and some shades of hair colour. And in 2012, a team led by Manfred Kayser of Erasmus University Medical Center in Rotterdam, the Netherlands, identified five genetic variants with detectable effects on facial shape. It was a start, but still a long way from reliable genetic photofits. If I had to guess, I'd imagine this kind of thing is a couple of decades away. But with a large enough database of genetic data, it's certainly possible.

To take the idea a step further, a team led by population geneticist Mark Shriver of Pennsylvania State University and imaging specialist Peter Claes of the Catholic University of Leuven (KUL) in Belgium used a stereoscopic camera to capture 3D images of almost 600 volunteers from populations with mixed European and West African ancestry. Because people from Europe and Africa tend to have differently shaped faces, studying people with mixed ancestry increased the chances of finding genetic variants affecting facial structure.

Kayser's study had looked for genes that affected the relative positions of nine facial "landmarks", including the middle of each eyeball and the tip of the nose. By contrast, Claes and Shriver superimposed a mesh of more than 7000 points onto the scanned 3D images and recorded the precise location of each point. They also developed a statistical model to consider how genes, sex and racial ancestry affect the position of these points and therefore the overall shape of the face.

Next the researchers tested each of the volunteers for 76 genetic variants in genes that were already known to cause facial abnormalities when mutated. They reasoned that normal variation in genes that can cause such problems might have a subtle effect on the shape of the face. After using their model to control for the effects of sex and ancestry, they found 24 variants in 20 different genes that seemed to be useful predictors of facial shape (PLoS Genetics, DOI: 10.1371/journal.pgen.1004224).

Reconstructions based on these variants alone aren't yet ready for routine use by crime labs, the researchers admit. Still, Shriver is already working with police to see if the method can help find the perpetrator in two cases of serial rape in Pennsylvania, for which police are desperate for new clues.

The Huawei revelations are devastating rebuttals to hypocritical U.S. complaints about Chinese penetration of U.S. networks, and also make USG protestations about not stealing intellectual property to help U.S. firms' competitiveness seem like the self-serving hairsplitting that it is. (I have elaborated on these points many times and will not repeat them here.) "The irony is that exactly what they are doing to us is what they have always charged that the Chinese are doing through us," says a Huawei executive. This isn't to say that the Chinese are not targeting foreign networks through Huawei equipment; they almost certainly are.

At the outset, we think it is important for IBM to clearly state some simple facts:

- IBM has not provided client data to the National Security Agency (NSA) or any other government agency under the program known as PRISM.

- IBM has not provided client data to the NSA or any other government agency under any surveillance program involving the bulk collection of content or metadata.

- IBM has not provided client data stored outside the United States to the U.S. government under a national security order, such as a FISA order or a National Security Letter.

- IBM does not put "backdoors" in its products for the NSA or any other government agency, nor does IBM provide software source code or encryption keys to the NSA or any other government agency for the purpose of accessing client data.

- IBM has and will continue to comply with the local laws, including data privacy laws, in all countries in which it operates.

To which we ask:

Asked whether two unfamiliar photos of faces show the same person, a human being will get it right 97.53 percent of the time. New software developed by researchers at Facebook can score 97.25 percent on the same challenge, regardless of variations in lighting or whether the person in the picture is directly facing the camera. Human brains are optimized for facial recognition, which makes this even more impressive.

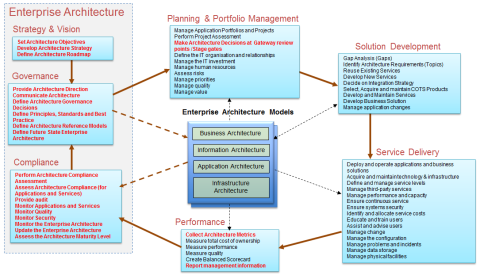

| COBIT5 reference | Process |
| --- | --- |
| APO03 | Manage Enterprise Architecture |
| APO02 | Define Strategy (in this context this usually means the IT strategy) |
| APO04 | Manage Innovation (via the Enterprise Architecture Governance Board) |
| BAI08 | Manage Knowledge (via the EA Repository) |
| BAI06 | Manage Changes (i.e. strategic changes and IT-enabled business changes that drive the future state enterprise architecture) |
| MEA03 | Monitor and assess compliance with external requirements (via the Architecture Governance Board) |
| APO05 | Manage Portfolios (with EA Roadmap) |
| APO11 | Manage Quality (via EA Appraisals) |
| APO12 | Manage Risk (via EA Appraisals) |
| EDM01 | Set and Maintain Governance Framework |
| EDM02 | Ensure Value Optimisation |
| EDM03 | Ensure Risk Optimisation |

In testimony before Congress, Target has said that it was only after the U.S. Department of Justice notified the retailer about the breach in mid-December that company investigators went back to figure out what happened. What it hasn't publicly revealed: Poring over computer logs, Target found FireEye's alerts from Nov. 30 and more from Dec. 2, when hackers installed yet another version of the malware. Not only should those alarms have been impossible to miss, they went off early enough that the hackers hadn't begun transmitting the stolen card data out of Target's network. Had the company's security team responded when it was supposed to, the theft that has since engulfed Target, touched as many as one in three American consumers, and led to an international manhunt for the hackers never would have happened at all.

Everything we've seen about QUANTUM and other internet activity can be replicated with a surprisingly moderate budget, using existing tools with just a little modification. The biggest limitation on QUANTUM is location: The attacker must be able to see a request which identifies the target. Since the same techniques can work on a Wi-Fi network, a $50 Raspberry Pi, located in a Foggy Bottom Starbucks, can provide any country, big and small, with a little window of QUANTUM exploitation. A foreign government can perform the QUANTUM attack NSA-style wherever your traffic passes through their country. Moreover, until we fix the underlying Internet architecture that makes QUANTUM attacks possible, we are vulnerable to all of those attackers.

And that's the bottom line with the NSA's QUANTUM program. The NSA does not have a monopoly on the technology, and their widespread use acts as implicit permission to others, both nation-state and criminal.

I think there's a good case to be made for security as an exercise in public health. It sounds weird at first, but the parallels are fascinating and deep and instructive. Last year, at a talk about all the ways that insecure computers put us all at risk, a woman in the audience put up her hand and said, "Well, you've scared the hell out of me. Now what do I do? How do I make my computers secure?"

And I had to answer: "You can't. No one of us can. I was a systems administrator 15 years ago. That means that I'm barely qualified to plug in a WiFi router today. I can't make my devices secure and neither can you. Not when our governments are buying up information about flaws in our computers and weaponising them as part of their crime-fighting and anti-terrorism strategies. Not when it is illegal to tell people if there are flaws in their computers, where such a disclosure might compromise someone's anti-copying strategy.

"But: If I had just stood here and spent an hour telling you about water-borne parasites; if I had told you about how inadequate water-treatment would put you and everyone you love at risk of horrifying illness and terrible, painful death; if I had explained that our very civilisation was at risk because the intelligence services were pursuing a strategy of keeping information about pathogens secret so they can weaponise them, knowing that no one is working on a cure; you would not ask me 'How can I purify the water coming out of my tap?'"

Because when it comes to public health, individual action only gets you so far. It doesn't matter how good your water is, if your neighbour's water gives him cholera, there's a good chance you'll get cholera, too. And even if you stay healthy, you're not going to have a very good time of it when everyone else in your country is stricken and has taken to their beds.

If you discovered that your government was hoarding information about water-borne parasites instead of trying to eradicate them; if you discovered that they were more interested in weaponising typhus than they were in curing it, you would demand that your government treat your water-supply with the gravitas and seriousness that it is due.

For years, said Ms Khudari, Kiln and many other syndicates had offered cover for data breaches, to help companies recover if attackers penetrated networks and stole customer information. Now, she said, the same firms were seeking multi-million pound policies to help them rebuild if their computers and power-generation networks were damaged in a cyber-attack. Insurance is an excellent pressure point to influence security.

"They are all worried about their reliance on computer systems and how they can offset that with insurance," she said.

Any company that applies for cover has to let experts employed by Kiln and other underwriters look over their systems to see if they are doing enough to keep intruders out.

Assessors look at the steps firms take to keep attackers away, how they ensure software is kept up to date and how they oversee networks of hardware that can span regions or entire countries.

Unfortunately, said Ms Khudari, after such checks were carried out, the majority of applicants were turned away because their cyber-defences were lacking.

One presentation outlines how the NSA performs “industrial-scale exploitation” of computer networks across the world.

RAGEMASTER (TS//SI//REL TO USA,FVEY) RF retro-reflector that provides an enhanced radar cross-section for VAGRANT collection. It's concealed in a standard computer video graphics array (VGA) cable between the video card and the video monitor. It's typically installed in the ferrite on the video cable. Page, with graphics, is here. General information about TAO and the catalog is here.

(U) Capabilities

(TS//SI//REL TO USA,FVEY) RAGEMASTER provides a target for RF flooding and allows for easier collection of the VAGRANT video signal. The current RAGEMASTER unit taps the red video line on the VGA cable. It was found that, empirically, this provides the best video return and cleanest readout of the monitor contents.

(U) Concept of Operation

(TS//SI//REL TO USA,FVEY) The RAGEMASTER taps the red video line between the video card within the desktop unit and the computer monitor, typically an LCD. When the RAGEMASTER is illuminated by a radar unit, the illuminating signal is modulated with the red video information. This information is re-radiated, where it is picked up at the radar, demodulated, and passed on to the processing unit, such as an LFS-2 and an external monitor, NIGHTWATCH, GOTHAM, or (in the future) VIEWPLATE. The processor recreates the horizontal and vertical sync of the targeted monitor, thus allowing TAO personnel to see what is displayed on the targeted monitor.

Unit Cost: $30

Status: Operational. Manufactured on an as-needed basis. Contact POC for availability information.
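The Concept of Operation above says the illuminating signal is "modulated" with the video information and later "demodulated", but it does not name the modulation scheme. As a textbook illustration only, the sketch below assumes plain amplitude modulation and recovers the levels with a simple envelope detector. All constants and function names are hypothetical, and nothing here reflects the actual hardware.

```python
import math

# Hypothetical parameters for the toy signal; none come from the catalog page.
CYCLES_PER_PIXEL = 4      # whole carrier cycles spent on each pixel level
SAMPLES_PER_CYCLE = 100   # sampling resolution of the simulated carrier
DEPTH = 0.5               # modulation depth


def modulate(pixels):
    # Scale a cosine carrier by (1 + DEPTH * level) for each level in [0, 1].
    samples = []
    for level in pixels:
        for k in range(CYCLES_PER_PIXEL * SAMPLES_PER_CYCLE):
            phase = 2 * math.pi * k / SAMPLES_PER_CYCLE
            samples.append((1 + DEPTH * level) * math.cos(phase))
    return samples


def demodulate(samples):
    # Envelope detection: rectify, average over each pixel window, and
    # rescale. The mean of |cos| over whole cycles is 2/pi, so multiplying
    # by pi/2 recovers the carrier amplitude for that window.
    window = CYCLES_PER_PIXEL * SAMPLES_PER_CYCLE
    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        envelope = (sum(abs(s) for s in chunk) / len(chunk)) * math.pi / 2
        levels.append((envelope - 1) / DEPTH)
    return levels


if __name__ == "__main__":
    red_line = [0.0, 1.0, 0.5, 0.25]  # toy stand-in for red-channel levels
    recovered = demodulate(modulate(red_line))
    print([round(level, 2) for level in recovered])
```

The recovered values only approximate the originals, because the discrete average of the rectified carrier is slightly off from 2/pi. Recreating the sync signals, which the catalog attributes to the processing unit, is not modelled.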