Orchestrating containers is a complex task, and a lot of work is ongoing to solve this particular problem. Big companies, startups and open source projects are all involved. There are so many different technologies and projects that I was really confused when I started looking into this area. In this blog, I have tried to break the Docker orchestration problem down into smaller pieces and to map existing and developing solutions onto those pieces. Since the technologies are evolving and my knowledge in this area is limited, this blog might need updates and corrections as we move forward. I might also have missed a few technologies and companies.


Problem statement:

Docker does a great job of packaging and transporting individual containers. The specific problems we need to address are:

- Distributed applications that are split across multiple containers.

- Managing a large number of containers, both in allocating them to a cluster of hosts and in handling container failures.

Orchestration blocks:

The following are the orchestration blocks as I see them. A complete solution does not necessarily need all of these blocks.

Container application definition:

A typical distributed application spans multiple containers, and those containers share storage volumes, environment variables, port numbers and services. There needs to be some way to define affinity, so that related containers are placed on the same host. QoS characteristics such as CPU and memory usage need to be defined for the containers. There has to be a definition format, and an agent that parses the definition and creates the containers appropriately. Fig’s application definition file, AWS’s task definition for containers, GCE’s container manifest and Docker Compose fall into this category.

The following is an example Fig YAML file that defines an application with a web container and a redis container.

    web:
      build: .
      command: python app.py
      ports:
        - "5000:5000"
      volumes:
        - .:/code
      links:
        - redis
    redis:
      image: redis
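To make the "agent that parses the definition" idea concrete, here is a minimal sketch of one thing such an agent has to work out: the order in which to start containers so that linked services exist first. It assumes the YAML above has already been loaded into a Python dict (a real agent would use a YAML parser, which is left out to keep the example self-contained), and `start_order` is a hypothetical helper, not part of Fig.

```python
# Hypothetical sketch: derive container start order from Fig-style "links".
from graphlib import TopologicalSorter  # Python 3.9+

# The same application as the YAML above, already parsed into a dict.
APP = {
    "web": {
        "build": ".",
        "command": "python app.py",
        "ports": ["5000:5000"],
        "volumes": [".:/code"],
        "links": ["redis"],
    },
    "redis": {"image": "redis"},
}


def start_order(app: dict) -> list[str]:
    """Return service names ordered so every linked service starts first."""
    graph = {}
    for name, spec in app.items():
        # A link may be written "service" or "service:alias"; keep the service.
        deps = {link.split(":")[0] for link in spec.get("links", [])}
        missing = deps - set(app)
        if missing:
            raise ValueError(f"{name} links to undefined services {sorted(missing)}")
        graph[name] = deps
    # static_order() yields each node's dependencies before the node itself
    # and raises graphlib.CycleError if the links form a cycle.
    return list(TopologicalSorter(graph).static_order())


print(start_order(APP))  # ['redis', 'web']
```

A topological sort is a natural fit here because links form a dependency graph; rejecting undefined link targets up front gives the user an error before any container is created.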

Cluster management:

Containers are typically deployed across a cluster of machines, and we need a scheduler that distributes them efficiently and handles machine failures. CoreOS’s fleet, Google’s Kubernetes, Mesosphere’s Mesos and Docker’s Swarm fall into this category. AWS seems to have its own cluster management solution. OpenStack might use the cluster management solution available in Nova.
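The two duties mentioned above, efficient distribution and handling machine failures, can be sketched in a few lines. This is a toy model under stated assumptions: capacity is counted in containers rather than CPU or memory, placement uses a simple "spread" rule (least-loaded healthy host), and `Host` and `SpreadScheduler` are hypothetical names, not the API of any of the projects listed.

```python
# Hypothetical cluster-manager sketch: spread placement plus failover.
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    capacity: int                                    # max containers accepted
    healthy: bool = True
    containers: list[str] = field(default_factory=list)


class SpreadScheduler:
    def __init__(self, hosts: list[Host]):
        self.hosts = {h.name: h for h in hosts}

    def place(self, container: str) -> str:
        """Assign a container to the healthy host with the fewest containers."""
        candidates = [h for h in self.hosts.values()
                      if h.healthy and len(h.containers) < h.capacity]
        if not candidates:
            raise RuntimeError(f"no capacity left for {container}")
        # Ties are broken by host name so placement is deterministic.
        target = min(candidates, key=lambda h: (len(h.containers), h.name))
        target.containers.append(container)
        return target.name

    def host_failed(self, name: str) -> dict[str, str]:
        """Mark a host as down and reschedule everything that ran on it."""
        host = self.hosts[name]
        host.healthy = False
        orphans, host.containers = host.containers, []
        return {c: self.place(c) for c in orphans}


sched = SpreadScheduler([Host("h1", 2), Host("h2", 2), Host("h3", 2)])
for c in ["web-1", "web-2", "redis-1"]:
    sched.place(c)                  # lands on h1, h2, h3 respectively
moved = sched.host_failed("h1")     # web-1 ran on h1, so it is moved
print(moved)  # {'web-1': 'h2'}
```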

Container-optimized VMs/Linux distributions:

Companies with their own Linux distributions are targeting the management of large clusters of data center nodes, allowing the whole cluster to be managed as if it were a single node. These Linux distributions have agents that talk to the cluster manager. The agents manage the containers on their host, which includes restarting containers on failure, monitoring performance and automatically discovering container services. Examples are CoreOS, Mesosphere’s DCOS and Project Atomic. AWS and GCE also have their own container VM distributions, with Docker and some container management services pre-installed.
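A host agent's restart and discovery duties boil down to a reconciliation loop: compare what should run with what does run, fix the difference, and publish what is live. The sketch below simulates container state with plain Python collections instead of calling Docker; `HostAgent`, the restart budget and the registry format are all assumptions for illustration.

```python
# Hypothetical host-agent sketch; container state is simulated, no Docker calls.


class HostAgent:
    def __init__(self, host: str, max_restarts: int = 3):
        self.host = host
        self.max_restarts = max_restarts
        self.restarts: dict[str, int] = {}    # container -> restarts so far
        self.registry: dict[str, str] = {}    # service name -> "host:port"

    def reconcile(self, desired: dict[str, int], running: set[str]) -> list[str]:
        """Restart dead containers and refresh the discovery registry.

        desired maps container name -> published port; running holds the
        names currently up. Returns the containers restarted on this pass.
        """
        restarted = []
        for name, port in desired.items():
            if name not in running:
                count = self.restarts.get(name, 0)
                if count >= self.max_restarts:
                    # Restart budget spent: stop advertising the service and
                    # leave rescheduling to the cluster manager.
                    self.registry.pop(name, None)
                    continue
                self.restarts[name] = count + 1
                running.add(name)   # stand-in for actually starting the container
                restarted.append(name)
            self.registry[name] = f"{self.host}:{port}"
        return restarted


agent = HostAgent("node-1", max_restarts=1)
desired = {"web": 5000, "redis": 6379}
print(agent.reconcile(desired, {"redis"}))  # ['web']
print(agent.reconcile(desired, {"redis"}))  # []
print(agent.registry)                       # {'redis': 'node-1:6379'}
```

The restart budget is one simple way to keep a crash-looping container from being restarted forever on a bad host; escalating to the cluster manager lets it place the container elsewhere.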

Services:

Once the above building blocks are in place, we can add services such as load balancing and automatic scale-up/scale-down, which can extend into something like a PaaS.
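As a rough illustration of the two services named above, the sketch below pairs a simple proportional autoscaling rule (scale replicas by observed vs. target CPU utilization, clamped to a range) with a round-robin load balancer. The rule, its percentages and the class names are assumptions chosen for clarity, not the behavior of any particular product.

```python
# Hypothetical sketch: proportional autoscaling and round-robin balancing.
import itertools


def desired_replicas(current: int, cpu_percent: int, target_percent: int,
                     min_r: int = 1, max_r: int = 10) -> int:
    """Scale the replica count in proportion to observed vs. target CPU load."""
    # Integer ceiling division avoids floating-point rounding surprises.
    wanted = -(-current * cpu_percent // target_percent)
    return max(min_r, min(max_r, wanted))


class RoundRobinBalancer:
    """Hand out backends in turn; rebuild it whenever the replica set changes."""

    def __init__(self, backends: list[str]):
        self._cycle = itertools.cycle(backends)

    def next_backend(self) -> str:
        return next(self._cycle)


print(desired_replicas(current=4, cpu_percent=90, target_percent=60))  # 6
print(desired_replicas(current=4, cpu_percent=15, target_percent=60))  # 1
lb = RoundRobinBalancer(["10.0.0.1:5000", "10.0.0.2:5000"])
print([lb.next_backend() for _ in range(3)])
```

Clamping to `min_r`/`max_r` keeps a noisy metric from scaling an application to zero or to an unbounded size.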

Container integration with major public cloud vendors and OpenStack:

Let's look at how containers are integrated with GCE, AWS and OpenStack. Most of these integrations are in trial stages, and production-ready releases are expected in the next few months.

Google’s GCE container integration:

Google has been using containers for its internal projects for a long time, so it was natural for Google to expose this internal capability for external use through Google Compute Engine (GCE). This is currently an alpha release. As of now, pricing appears to apply only to the VMs, not to the containers themselves. The important components are as follows.

Container VM:

This VM has Docker pre-installed, along with an agent that interprets the manifest file describing the containers that make up a single application.
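What the on-VM agent does with a manifest can be pictured as translating each container entry into a `docker run` invocation. In this sketch the manifest field names (`containers`, `ports`, `containerPort`, `hostPort`, `env`) are illustrative assumptions rather than the exact GCE schema, and `example/web` is a hypothetical image; the `docker run` flags used (`-d`, `--name`, `-p`, `-e`) are standard Docker CLI options.

```python
# Hypothetical manifest-to-command sketch; manifest fields are illustrative.
import shlex

MANIFEST = {
    "containers": [
        {"name": "redis", "image": "redis",
         "ports": [{"containerPort": 6379, "hostPort": 6379}]},
        {"name": "web", "image": "example/web",
         "ports": [{"containerPort": 5000, "hostPort": 80}],
         "env": [{"name": "APP_ENV", "value": "production"}]},
    ]
}


def docker_run_commands(manifest: dict) -> list[list[str]]:
    """Turn each manifest entry into an argument list for `docker run`."""
    commands = []
    for c in manifest["containers"]:
        cmd = ["docker", "run", "-d", "--name", c["name"]]
        for p in c.get("ports", []):
            # -p HOST:CONTAINER publishes the container port on the VM.
            cmd += ["-p", f"{p['hostPort']}:{p['containerPort']}"]
        for e in c.get("env", []):
            cmd += ["-e", f"{e['name']}={e['value']}"]
        cmd.append(c["image"])
        commands.append(cmd)
    return commands


for cmd in docker_run_commands(MANIFEST):
    print(shlex.join(cmd))  # printable form; an agent would exec the list directly
```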

Kubernetes:

Kubernetes is an application for managing containers across multiple hosts. Google started the project, and it is now an open source project in which multiple companies are actively involved. Kubernetes focuses on cluster management, affinity management of related containers, high availability of containers and services such as load balancing. Work is also ongoing to integrate Kubernetes with Mesos. In this joint project, as I understand it, Kubernetes will handle pod management (a pod is Kubernetes' group of related containers that are scheduled together on one host) and Mesos will handle cluster management.
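One way Kubernetes ties load balancing to pods is through labels: pods carry key/value labels, and a service uses a label selector to decide which pods are its backends. The sketch below models only equality-based selectors in plain Python; the pod names, IPs and labels are made up, and `endpoints` is a hypothetical helper rather than a Kubernetes API.

```python
# Hypothetical sketch of label-selector matching for a service's endpoints.
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str]
    containers: list[str]   # containers in a pod are co-scheduled on one host


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """A pod matches when it carries every key=value pair in the selector."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints(selector: dict[str, str], pods: list[Pod], port: int) -> list[str]:
    return [f"{p.ip}:{port}" for p in pods if matches(selector, p.labels)]


pods = [
    Pod("web-a", "10.1.0.2", {"app": "web", "tier": "frontend"}, ["web", "log-agent"]),
    Pod("web-b", "10.1.0.3", {"app": "web", "tier": "frontend"}, ["web", "log-agent"]),
    Pod("redis", "10.1.0.4", {"app": "redis", "tier": "backend"}, ["redis"]),
]
print(endpoints({"app": "web"}, pods, 5000))  # ['10.1.0.2:5000', '10.1.0.3:5000']
```

Because the service refers to labels rather than specific pods, replacement pods created after a failure are picked up automatically as long as they carry the same labels.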

AWS EC2 Container Service:

EC2 Container Service is currently available only as a preview. Pricing applies only to the EC2 instances, not to the containers. Based on AWS's roadmap, it looks like AWS will integrate its higher-level services, such as EBS and CloudFormation, with containers. The following are some of the components of this service.

Task definition handler:

The task definition, written in JSON, describes the containers in detail: memory, CPU, storage and networking requirements, and the relationships between containers. Containers are spawned and managed based on this definition.
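The sketch below builds a small task definition in Python and serializes it to JSON. The field names (`family`, `containerDefinitions`, `name`, `image`, `cpu`, `memory`, `essential`, `portMappings`, `links`) follow the ECS task definition format; the values, the `example/web` image and the `check_and_size` validation helper are hypothetical, meant only to show how the definition encodes resource needs and container relationships.

```python
# Sketch of an ECS-style task definition; values and helper are illustrative.
import json

task_definition = {
    "family": "web-app",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "example/web",
            "cpu": 256,          # CPU units (1024 units = one CPU core)
            "memory": 256,       # memory limit in MiB
            "essential": True,
            "portMappings": [{"containerPort": 5000, "hostPort": 80}],
            "links": ["redis"],
        },
        {"name": "redis", "image": "redis", "cpu": 128, "memory": 128,
         "essential": True},
    ],
}


def check_and_size(task: dict) -> tuple[int, int]:
    """Reject dangling links and return the total (cpu, memory) the task needs."""
    defs = task["containerDefinitions"]
    names = {c["name"] for c in defs}
    for c in defs:
        for link in c.get("links", []):
            if link.split(":")[0] not in names:
                raise ValueError(f"{c['name']} links to unknown container {link!r}")
    return sum(c.get("cpu", 0) for c in defs), sum(c.get("memory", 0) for c in defs)


print(check_and_size(task_definition))  # (384, 384)
print(json.dumps(task_definition, indent=2))
```

The summed CPU and memory are what a scheduler would need to find room for on a single EC2 host, since linked containers in one task run together.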

Cluster manager and scheduler:

This module manages the cluster of EC2 hosts. AWS has its own scheduler, and AWS also mentions plans to let customers integrate third-party schedulers.
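Letting customers bring a third-party scheduler implies a split between the cluster manager (which tracks resources) and a pluggable placement policy. Here is a minimal sketch of that split, assuming placement depends only on free memory per host; `Scheduler`, `BinPack`, `Spread` and `ClusterManager` are hypothetical names and not AWS APIs.

```python
# Hypothetical sketch of a cluster manager with a pluggable scheduler.
from typing import Optional, Protocol


class Scheduler(Protocol):
    def choose(self, free: dict[str, int], need: int) -> Optional[str]: ...


class BinPack:
    """Fill the fullest host that still fits, keeping other hosts free."""

    def choose(self, free: dict[str, int], need: int) -> Optional[str]:
        fits = [h for h, f in free.items() if f >= need]
        return min(fits, key=lambda h: (free[h], h), default=None)


class Spread:
    """Use the emptiest host, spreading load and failure impact."""

    def choose(self, free: dict[str, int], need: int) -> Optional[str]:
        fits = [h for h, f in free.items() if f >= need]
        return min(fits, key=lambda h: (-free[h], h), default=None)


class ClusterManager:
    def __init__(self, free_memory: dict[str, int], scheduler: Scheduler):
        self.free = dict(free_memory)   # host -> free memory (MiB)
        self.scheduler = scheduler      # any object with a matching choose()

    def run_task(self, memory: int) -> str:
        host = self.scheduler.choose(self.free, memory)
        if host is None:
            raise RuntimeError("no host has enough free memory")
        self.free[host] -= memory
        return host


hosts = {"ec2-a": 1024, "ec2-b": 512}
print(ClusterManager(hosts, BinPack()).run_task(256))  # ec2-b
print(ClusterManager(hosts, Spread()).run_task(256))   # ec2-a
```

Because the manager only calls `choose`, swapping the placement policy does not touch resource bookkeeping, which is the kind of separation a third-party scheduler integration would need.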

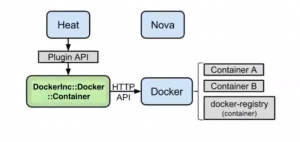

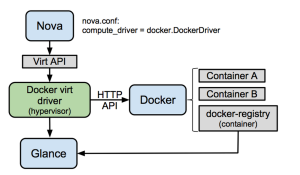

OpenStack container integration:

OpenStack has some Docker integration with Heat and Nova, and work is ongoing to complete it. The following are the block diagrams I found on the OpenStack wiki.

- Docker driver integrates Heat and Nova with Docker.

- With Heat integration, Container templates are included in Heat templates.

- Even though Nova container integration is available, container extensions are not yet available in Nova. This means that container features such as linking containers, passing environment variables, and creating and sharing Docker volumes are not yet available, which makes the Nova integration somewhat less useful. This is a work in progress.

Docker’s native orchestration:

Even though a lot of work is ongoing around Docker to support orchestration, Docker as a company felt it would be good to provide orchestration natively in Docker. Docker calls this the “Batteries included” approach: the default orchestration pieces are included natively, and Docker will make them modular enough that customers can easily swap in their own orchestration tools. For example, Docker will have a native cluster manager, and customers who want to replace it with an open source cluster manager such as Kubernetes will be able to do so easily. The following are the components of Docker’s orchestration that were announced recently. Docker Machine and Swarm are available as previews, while Docker Compose is at an earlier stage.

Machine:

Docker Machine makes it easy to deploy Docker containers on any end system, such as bare metal, VMs or the cloud.

Swarm:

Docker Swarm is a cluster manager for deploying containers across multiple hosts. It will provide scheduling and high-availability functionality.
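A Swarm-style scheduling pass can be pictured as filters followed by a strategy: filters remove nodes that cannot run the container (unhealthy, missing a required label, host port already taken), and a strategy ranks whatever is left. This sketch mirrors that shape in plain Python; the `Node` fields, the label names and the `schedule` function are illustrative assumptions, not Swarm's code or API.

```python
# Hypothetical Swarm-style sketch: filter nodes, then rank with a strategy.
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    labels: dict[str, str]
    used_ports: set[int] = field(default_factory=set)
    containers: int = 0
    healthy: bool = True


def schedule(nodes: list[Node], constraints: dict[str, str],
             host_port: Optional[int] = None) -> Optional[str]:
    candidates = [
        n for n in nodes
        if n.healthy                                                   # health filter
        and all(n.labels.get(k) == v for k, v in constraints.items())  # constraint filter
        and (host_port is None or host_port not in n.used_ports)       # port filter
    ]
    if not candidates:
        return None
    # Spread strategy: prefer the node running the fewest containers.
    best = min(candidates, key=lambda n: (n.containers, n.name))
    best.containers += 1
    if host_port is not None:
        best.used_ports.add(host_port)
    return best.name


nodes = [
    Node("node-1", {"storage": "ssd"}, used_ports={80}),
    Node("node-2", {"storage": "ssd"}),
    Node("node-3", {"storage": "disk"}),
]
print(schedule(nodes, {"storage": "ssd"}, host_port=80))  # node-2
print(schedule(nodes, {"storage": "ssd"}, host_port=80))  # None: port 80 taken on both ssd nodes
```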

Compose:

Docker Compose will make it easy to define and manage a distributed application spanning multiple containers.

Final thoughts:

- Container orchestration is a complex problem with many moving parts, and different vendors are approaching it differently. Some vendors provide a complete solution that includes all the orchestration blocks described earlier, while a few focus on a single piece, such as the cluster manager or optimized hosts. Everyone seems to want to keep their interfaces open, so that a pluggable architecture evolves in which different modules work nicely with each other. This is good for end users if the pluggable model works smoothly.

- VMs and VM management have reached a good level of maturity. Even though containers have some specific features, such as container linking, and the scale required for containers is somewhat different from that for VMs, I wonder whether VM orchestration solutions can be reused for containers.

- Data centers will have both VMs and containers, each with its own use cases. Would it make sense to orchestrate VMs and containers the same way, and to define a distributed application that includes both VMs and containers?

- Networking is an implicit requirement of the infrastructure that is needed for container orchestration. Here again, I feel that we need to have a common mechanism between Containers and VMs.
